LHC program participants at the Santa Barbara Harbor.

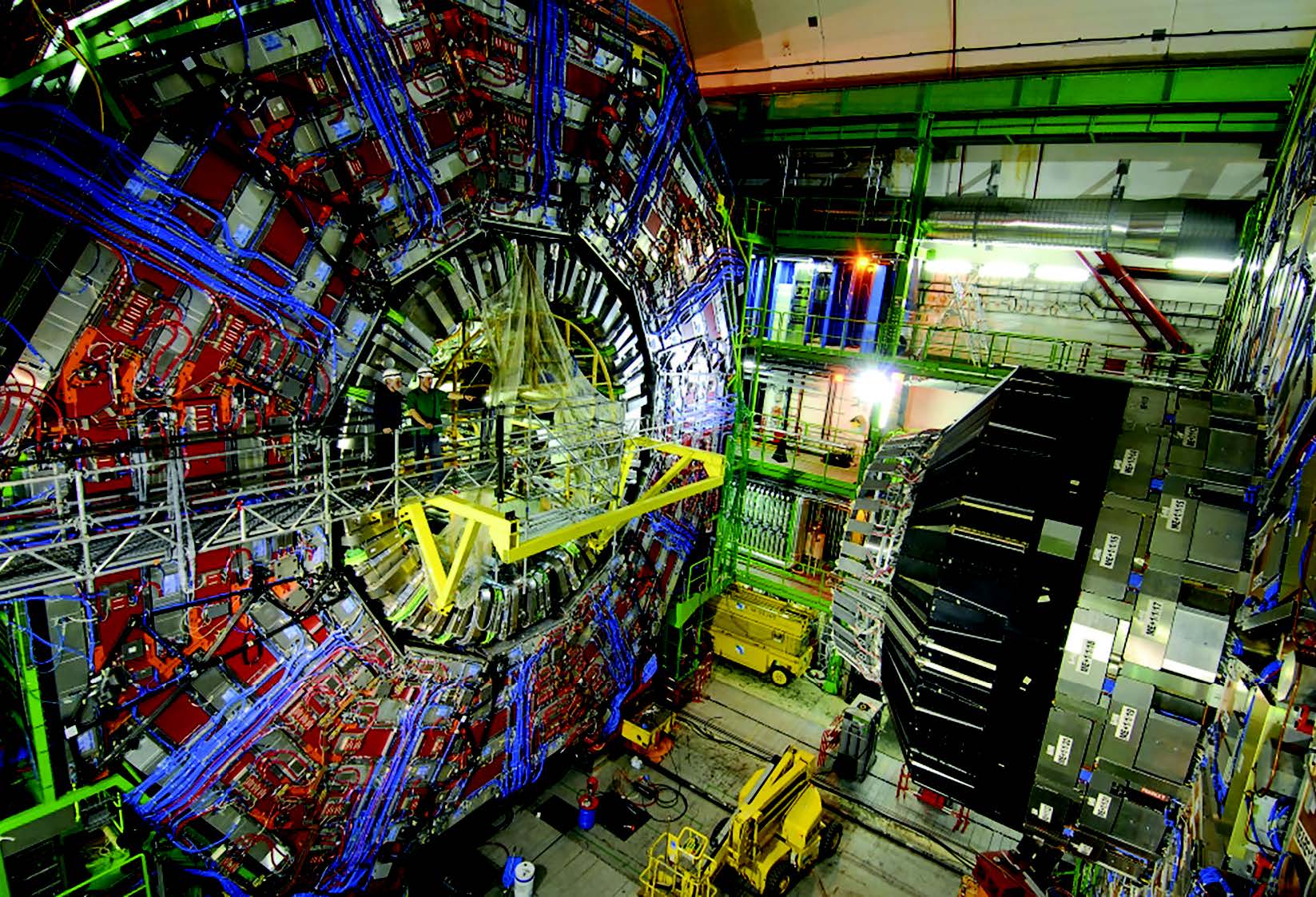

High-energy physics has entered a new era of discovery as the Large Hadron Collider (LHC) at the CERN laboratory has begun colliding protons at an energy almost 7 times greater than the best previous machine. The LHC occupies a 27-kilometer circumference tunnel outside Geneva, Switzerland. A few enormous particle detectors, weighing up to 14,000 tons, record proton collisions at various points around the ring. They were built by collaborations of thousands of experimentalists, who also analyze the data they collect.

The first run of the LHC, from 2009 to 2012, culminated in the announcement of the discovery of the Higgs boson on July 4, 2012. This discovery led to the award of the 2013 Nobel Prize in Physics to François Englert and Peter Higgs, who predicted this particle over 50 years ago. The Higgs boson was the last missing building block of the Standard Model of particle physics; in fact, it is the model's centerpiece. On the one hand, the Standard Model (SM) successfully describes essentially all subatomic experimental phenomena and has proven extremely robust against every experimental test. On the other hand, it cannot account for dark matter, and it leaves many conceptual puzzles unexplained.

In 2015 the LHC began colliding protons again, at almost twice the energy of the first run. In the ongoing Run II, the detectors have already recorded more collisions than in all of Run I, and at this higher energy the probability of producing the Higgs boson, or many other new particles, is much greater than it was in Run I. The LHC will operate for another decade or two, collecting over a hundred times as much data as it has so far and opening the door to many more discoveries beyond the Higgs boson.

Now that the Higgs boson has been found, for the first time since the introduction of the SM in 1972 we are searching only for truly new and unexpected signals of new physics, i.e., phenomena not described by the SM. However, finding new physics is like finding a needle in a haystack: even the Higgs boson is produced only once in every billion proton collisions. We therefore need to understand the haystack (the SM) incredibly well. That is, we need to predict, within the rules of the SM and to high precision, the probabilities for producing many different kinds of particles in proton collisions at the LHC, matching the high precision that the experiments are achieving.

The Compact Muon Solenoid detector at CERN’s LHC. Image courtesy of CERN.

Proton collisions at the LHC are exceedingly complicated. Protons are not simple particles but bags containing many constituents: quarks and gluons. Proton collisions are really collisions of quarks and gluons, yet even describing these collisions requires highly complex calculations in quantum field theory (QFT), the framework that unites quantum mechanics and Einstein’s theory of special relativity. Quarks and gluons interact with each other through the strong nuclear force, described by the theory of quantum chromodynamics (QCD). Quarks interact with the rest of the Standard Model through the electroweak (EW) interactions, forces mediated by the photon and its two super-heavy cousins, the W and Z bosons (the W boson is responsible for some kinds of radioactive decay). And quarks and gluons do not exist in isolation; they are always bound into particles called hadrons, the most familiar of which is the proton. When they emerge from collisions at the LHC, they do so as collimated sprays of hadrons called jets.

Theorists use the rules of QFT and the SM to compute the many possible outcomes of LHC collisions to high precision. These computations are becoming very sophisticated, involving hundreds of thousands of the diagrams invented by Richard Feynman. The progress has been breathtaking: calculations once thought impossible are now commonplace. This improvement is necessary to match the high precision of the experimental data, including measurements of the properties of the Higgs boson. At the energies reachable in Run II of the LHC, electroweak effects that could previously be ignored are becoming important. Theorists are also involved in determining the initial conditions for the collisions, that is, the composition of the proton in terms of quarks and gluons. And they produce detailed probabilistic simulations, called Monte Carlo programs, which describe the final stages of the collision: how various particles decay and how quarks and gluons materialize into jets of hadrons.

The KITP program in Spring 2016, LHC Run II and the Precision Frontier, brought together a diverse group of theorists, experts in all of the above areas, to scrutinize every aspect of making precise LHC predictions. We also interacted closely with participants of the concurrent KITP workshop on the experimental challenges at the LHC, which allowed us to compare state-of-the-art theory predictions with experimental results. The theorists were not only able to provide guidance to their experimental colleagues but also gained a better understanding of where further theoretical improvements are still needed.

Our program’s activities have already resulted in 41 (and counting) papers submitted to professional journals, manuscripts in preparation, review articles, and working group reports. Much of this research involved collaborations newly formed among workshop participants. The KITP proved to be the perfect environment for advancing the precision frontier to the level needed to enable both measurements and discoveries in Run II of the LHC.

- Radja Boughezal, Lance Dixon, Frank Petriello, Laura Reina, and Doreen Wackeroth; LHC Run II and the Precision Frontier Program Coordinators

KITP Newsletter, Fall 2016