Image by Kevin Son / Daily Nexus.


Humans harbor an extraordinary auditory capacity: the cocktail party effect. We can tune out background noise in a room full of conversation and focus on the speaker we intend to converse with. We can tune out the constant tick-tock of a clock in a room just as we can ignore a car’s motor running while driving. At the same time, we can instantly tell when a car makes a peculiar noise or a glass breaks at a dinner party.

The way the human brain is able to analyze a complex auditory setting by focusing on one particular stimulus while filtering out a range of other stimuli remains a mystery.

The KITP program “Physics of Hearing: From Neurobiology to Information Theory and Back,” held during the summer of 2017, integrated research from different fields to make sense of the information theory of complex auditory signals. “The goal of the program was to foster new collaborations and projects between physicists and neurobiologists that work on hearing and scientists who use machine learning to analyze and process speech,” program coordinator Tobias Reichenbach, a senior lecturer in the department of bioengineering at Imperial College London, said.

A significant volume of research has clarified the biophysical mechanisms through which the inner ear encodes sound stimulation into neural signals that are subsequently processed in the auditory brainstem and cerebral cortex. However, it is still poorly understood how a complex auditory scene is broken down into its individual natural signals, such as speech.

In an effort to better understand the connection between the ear and brain at a deeper level, the eight-week program examined many different perspectives, including “the interdisciplinarity between physicists, neurobiologists and speech-recognition engineers who were all interested in speech and hearing,” Reichenbach said.

“We were really lucky to have top-notch researchers from across the globe who came eager to share their unpublished work and to engage ideas outside of their field of interest,” program coordinator Maria Geffen said. Geffen is an assistant professor at the University of Pennsylvania, where she built the Laboratory of Auditory Coding, which explores the neural mechanisms of auditory processing.

Physicists are particularly interested in the biophysics of the inner ear because it can be replicated in the front end of machine-learning speech recognition systems, helping to improve performance. They are also curious about the neural mechanisms through which sound is processed, since these mechanisms can be mirrored in artificial deep neural networks.

Similarly, neurobiologists are interested in machine-learning approaches because these methods let them analyze datasets obtained from multi-cellular recordings. They are also intrigued by artificial neural networks and are investigating how the computations of artificial neurons in such networks compare to those of living neurons.

The program looked at how biology and technology work together. Hearing technology has seen many advances, such as cochlear implants and voice-recognition devices.

“We discussed how to make them work better in hearing noise, listening to music, more sophisticated aspect of sound processing,” Geffen said.

Therefore, maintaining collaboration with professionals from many disciplines is fundamental in analyzing how the brain processes complex sounds and replicating this mechanism in hearing aids and voice-recognition devices.

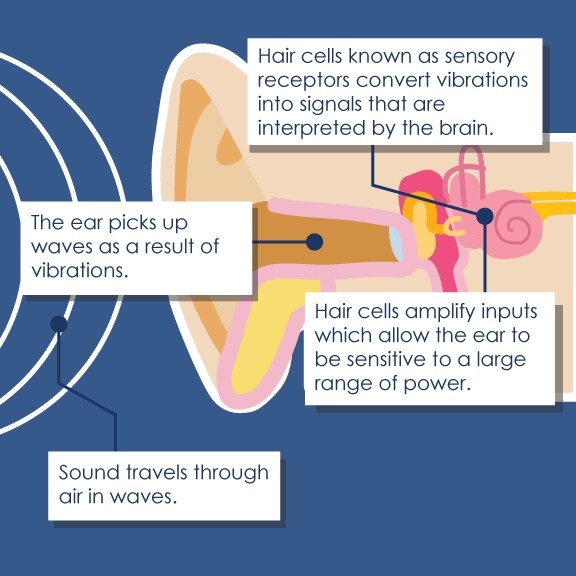

Hearing is an intricate process that depends on a chain of actions and reactions. Sound travels through the air as waves, which the ear picks up as vibrations. Sensory receptors in the inner ear called hair cells convert these vibrations into signals that are interpreted by the brain. These hair cells are of particular interest to scientists because their operation is only partly understood and because their degeneration causes hearing impairment in a large number of people.

Hair cells employ an active mechanical process to amplify incoming signals, allowing the ear to remain sensitive to sounds across a wide range of intensities. As this active process weakens, so does the ear’s sensitivity to differences in sound frequency, which impairs its ability to distinguish between different sound sources.

“Lack of hearing is a very important problem; most of us, if we live long enough, are guaranteed to lose some hearing. When we lose hearing, our ability to detect sound is lost, but more so, we lose the ability to hear sound in the presence of noise,” Geffen said.

By hosting the Physics of Hearing program, the KITP fostered collaboration among various disciplines in order to better understand the complex mechanisms of hearing and to develop ideas for future research.

“What we wanted to do is bring people across the full spectrum, from people who study speech processing and speech recognition — for example, from Google, where they try to recognize speech, words and text — to people who study biological processes of hearing — either humans or in animals — in the central auditory system and people who study dynamics of the cochlear inner ear,” Geffen said. “Our hope was that by learning about what people do in speech recognition, we can better design stimuli to use in our experiment and understand neural aspects of auditory systems and participants will be able to start collaborations with many researchers.”

- Erin Haque, Student Writer at the UCSB Daily Nexus.

KITP Newsletter, Fall 2017

Related Talk: How Hearing Happens, available at https://www.youtube.com/embed/Q2YGi-dq36w