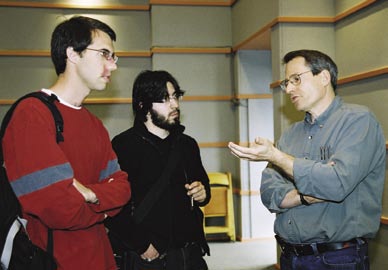

Richard D. Eager (l) and Dan Malinow, both first year physics students at UCSB, chat with Caltech's John Preskill after his “pedagogical lecture” on “Fault Tolerant Quantum Computation.” “Pedagogical lectures” are a distinctive feature of the KITP program “Topological Phases and Quantum Computation.” Photo by Nell Campbell.

“Interdisciplinary” is a word that has gotten a lot of press in the past decade’s reporting on prospects for scientific discovery. The presumption is that the action is occurring not just at the boundaries of disciplines, but also at the intersections where the boundaries of two or more disciplines overlap. Such is surely the case with topological quantum computing, as the KITP program (Feb. 21 to May 19) has dramatically illustrated.

Its roughly 80 participants represent the confluence of four streams of research. There are condensed matter theorists who are experts on the fractional quantum Hall effect and other possible topological phases of matter. There are condensed matter experimentalists studying fractional quantum Hall states in gallium arsenide devices. There are also theorists studying quantum computation: some coming from an atomic physics background; others studying quantum information in the abstract. Straddling all of these groups are the proponents of topological quantum computing, a small core group which is collaborating intensely with experts from the other areas and attracting converts.

The use of topological phases represents an approach to quantum computing so radically different from the others that the overlap between the people pursuing it and those pursuing other approaches has indeed been small.

The sustained opportunity for sharing ideas is one reason for the dynamism and distinctiveness of the program, according to one of its organizers, condensed matter theorist Chetan Nayak, a participant in Microsoft's topological quantum computing project temporarily lodged at the KITP and scheduled to move into the new UC Santa Barbara building housing the California NanoSystems Institute (CNSI). The other two organizers are Sander Bais from the University of Amsterdam’s Institute for Theoretical Physics and Caltech’s John Preskill, whose May 3 public lecture “Putting Weirdness to Work: Quantum Information Science” provided a snapshot of the quantum approach.

One distinctive feature of both groups of quantum computing researchers is the overlap they represent between condensed matter physics, especially theory, and mathematics.

Said Nayak, “Many people have come here who study quantum computing abstractly and, in many cases, have been thinking about other realizations of quantum computers not involving topological phases.” That latter group, according to Nayak, has come to the KITP program to learn, for instance, “the connections between their kind of error correcting codes and what a topological quantum computer does.”

Perhaps the key strength of the topological approach to quantum computing is that the underlying physical system automatically corrects and protects against errors.

“These people,” said Nayak, “who have worked on the problem of error correction codes with respect to the other approaches to quantum computing based on spin states and trapped ions, see the topological approach as an exciting ‘outside the box’ approach. People realize the difficulties involved in building a quantum computer are great, to say the least, so as a result some people think a linear progression isn’t necessarily going to get us there. We have to try something sneaky if we want to build a quantum computer, and this is such an idea.”

Genesis of Quantum Computing

Quantum computing arrived on the scene in a big way with Bell Labs’ Peter Shor’s 1994 discovery of an algorithm for finding the prime factors of large numbers via a hypothetical quantum computer. He said, in effect, here is what a quantum computer could do fast that a classical digital computer can’t (unless it can run for decades, centuries, millennia).

Who cares about the ability to find the prime factors of large numbers? The answer is the vast national security enterprise engaged in codes and code-breaking.

Conventional computing operates in a binary mode with a bit of either 0 or 1. What is different and makes quantum computation a potentially richer computational approach is that it takes advantage of the multiplicity of quantum states to encode not just one piece of information (for instance whether the spin of a particle is up or down), but more, such as the superposition of particle spin states. (Quantum mechanically, particle spin isn’t necessarily up or down, but in a state that is [classically speaking] a combination of up and down states.)
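The contrast between a bit and a qubit can be made concrete with a minimal sketch. It assumes the standard textbook description of a single spin as a pair of complex amplitudes; the function names and the angle parameters `theta` and `phi` are illustrative choices, not anything taken from the program itself.

```python
import math
import random


def make_qubit(theta, phi=0.0):
    """Amplitudes (a_up, a_down) for cos(theta/2)|up> + e^{i*phi} sin(theta/2)|down>."""
    a_up = complex(math.cos(theta / 2), 0.0)
    a_down = complex(math.cos(phi), math.sin(phi)) * math.sin(theta / 2)
    return a_up, a_down


def probabilities(state):
    """Probability of finding the spin up or down when it is measured."""
    a_up, a_down = state
    return abs(a_up) ** 2, abs(a_down) ** 2


def measure(state, rng):
    """A measurement yields a single classical answer, 'up' or 'down'."""
    p_up, _ = probabilities(state)
    return "up" if rng.random() < p_up else "down"


# A classical bit is one of two values; a qubit is any normalized pair of amplitudes.
equal_mix = make_qubit(math.pi / 2)          # equal combination of up and down
p_up, p_down = probabilities(equal_mix)
outcome = measure(equal_mix, random.Random(0))
```

The state carries two continuous parameters, yet each measurement returns only one of two answers, which is part of why quantum information is both rich and delicate.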

Shortly after Shor’s proof of usefulness, he and others began tackling the problem of error correction. The problem is that quantum information is delicate, so one must take very seriously the problem of correcting errors. Since the error correction process can itself introduce errors, the rate of errors must be low initially for the whole enterprise to work.
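Why the initial error rate must be low can be illustrated with a classical toy model, offered here only as a sketch. It assumes a three-copy repetition code decoded by majority vote, with each copy flipping independently with probability `p`, and a correction step that itself fails with probability `q`. Real quantum codes are considerably more subtle; the function names and parameters are hypothetical.

```python
def logical_error_rate(p):
    """Chance that majority vote over three copies is wrong (two or three flips)."""
    return 3 * p**2 * (1 - p) + p**3


def failure_with_noisy_correction(p, q):
    """Final error rate when the correction step itself flips the answer with probability q."""
    p_logical = logical_error_rate(p)
    return p_logical * (1 - q) + (1 - p_logical) * q


def encoding_helps(p, q):
    """True when encoding plus (noisy) correction beats leaving a single bit unprotected."""
    return failure_with_noisy_correction(p, q) < p


# With perfect correction, encoding wins whenever p is below one half.
ideal = logical_error_rate(0.01)

# Once the correction step is noisy, the same physical error rate may no longer be good enough.
careful = encoding_helps(0.01, 0.001)
sloppy = encoding_helps(0.01, 0.02)
```

In this toy model the encoded error rate falls roughly as the square of `p`, so protection improves quickly once errors are rare, but a sufficiently noisy correction step wipes out the gain.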

The KITP hosted a four-month program in 1996, “Quantum Computing and Quantum Coherence,” which catalyzed developments in the newly emerging field of quantum information processing because it enabled the pioneers, including Shor, to get together for the first time for sustained intellectual exploration. Another program in 2001 (“Quantum Information, Entanglement, Decoherence and Chaos”) again enabled the principals to gather for a slightly longer five-month stretch.

Those two earlier programs focused on approaches to quantum computing other than topological phases because that approach had yet to coalesce into a set of ideas to ground a program. A key difference between the earlier programs and the current one reveals a key difference between the approaches. The earlier programs, especially the first one, focused on, in effect, software issues, especially error correction, while the current program is focused essentially on hardware.

The titles of both early programs underscore what was emerging as perhaps the principal stumbling block to the other approaches to quantum computing: “decoherence,” the seemingly insurmountable problem of sustaining evanescent coherent states of a collective of quantum “particles,” especially when trying to scale up to a real computer. Quantum systems generally decohere very rapidly; consequently, it is difficult to keep the rate for this type of error low.

Other approaches to quantum computing have essentially analogized digital computing. Since the spin of an electron understood classically as up or down is understood quantum mechanically as any possible superposition of up/down, particle spin has, for instance, by analogy been envisioned as a candidate for the qubit. And a collection of spin qubits could encode vastly more information than a collection of classical bits. In contrast, with a topological phase, the qubit is not a single electron, but rather a collective excitation of the whole system, which is inherently stable because of its topology.

Such an excitation can be useful for quantum computing when it is an “anyon,” which exists only in two-dimensional space and has strange properties, such as “fractional statistics,” ranging “anywhere” (thus its name) between bosonic and fermionic statistics (See box, page 3).
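For the simplest (abelian) case, “fractional statistics” can be stated as a one-line formula. The symbols below are a standard textbook convention rather than notation from the program: $\psi$ is the wavefunction of two identical particles at positions $\mathbf{r}_1$ and $\mathbf{r}_2$, and $\theta$ is the phase acquired when the two are exchanged.

```latex
\psi(\mathbf{r}_2, \mathbf{r}_1) = e^{i\theta}\,\psi(\mathbf{r}_1, \mathbf{r}_2),
\qquad
\theta = 0 \ \text{(bosons)}, \quad
\theta = \pi \ \text{(fermions)}, \quad
0 < \theta < \pi \ \text{(anyons)}.
```

For non-abelian anyons the single phase $e^{i\theta}$ is replaced by a matrix acting on a space of degenerate states, so the order in which exchanges are performed matters.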

In 1997, Alexei Kitaev (then at the Landau Institute in Moscow and now with Microsoft’s quantum project, and a KITP program participant) wrote a “brilliant” paper explaining, according to Nayak, “how non-abelian anyons would have built-in error correction. I don’t think,” said Nayak, “that many people besides Michael Freedman [a topologist with a Fields Medal and head of the Microsoft project] realized that the Kitaev paper was a work of landmark importance. Alexei,” said Nayak speaking about Kitaev’s expertise in mathematics and physics, “is one of those peculiarly Russian geniuses whose conceptual insights fuse mathematics and physics.”

The next important development in the direct line of topological quantum computing came in a paper by Freedman and two mathematicians from Indiana University, Zhenghan Wang (a member of the Microsoft project) and Michael Larsen. Wang, like his PhD mentor Freedman, is a topologist, and Larsen is an expert on the representation theory of groups. Their papers demonstrated that a large class of non-abelian anyons have just as much computational power as ordinary qubit quantum computing. As Freedman, Kitaev, and Wang showed, a topological quantum computer has no more power than any other quantum computer; therefore, the two approaches are equally powerful computationally. The big advantage of topological quantum computing is its very low error rate.

What is a topological phase?

Said Nayak, “Topology is the study of shape irrespective of length and angle. So, for a topologist, the doughnut really is a coffee cup because, even though the handle is small compared to the cup and a doughnut is all handle, by stretching and deforming the doughnut you can make it into a coffee cup, and vice versa. Topologists are people who forget about all the details and look at the really important key features of a geometrical shape. Focusing on key features frees one from focusing on details wherein errors occur. With the storing of important physical information in topological features such as shape, small errors in the geometry [like a blip in the ceramic surface of a cup, which doesn’t interfere with the cup’s mission as container of liquid] don’t matter.”

Translated to a physical system, a topological phase is one in which the electrons organize themselves in a state such that the collective state of all the electrons doesn’t care about minor details. The only example in nature (at least so far) is the fractional quantum Hall effect. However, the hunt is on for topological phases in other systems.

Discovered in the early 1980s by experimentalists Dan Tsui and Horst Stormer, and explained by theorist Robert Laughlin, the fractional quantum Hall effect occurs when electrons are cooled to low temperature and put in high magnetic fields. They organize themselves in a highly correlated state in which the ground state and low-energy excitations of the system don’t care about any local perturbations. The effect showed up in measurements of electrical resistance that is quantized, and the resistance doesn’t depend on details of the device (fabricated out of very high quality gallium arsenide initially made by UCSB’s Art Gossard, then at Bell Labs), so the effect is robust.

“It turns out,” said Nayak, “that is exactly the kind of physical system one is looking for in quantum computing.”

Braiding

He described computation based on such a physical system as “taking one excitation around another or around several others,” which is called “braiding.” Because of the system’s robustness against details, said Nayak, “it doesn’t really matter whether one excitation takes a perfect circular path around the other one or a wiggly path or even pauses to take a break and then completes the transit. All that matters is that it goes around the other. Therefore the error rate associated with such an operation is essentially zero.”
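The idea that only “going around” matters has a simple classical analogue, sketched here under stated assumptions. The winding number of a closed path around a point counts how many times the path encircles it and ignores every other detail of the route. This is a stand-in for topological invariance, not a simulation of anyon braiding, and the helper names are illustrative.

```python
import math


def winding_number(path, center=(0.0, 0.0)):
    """Count how many times a closed polygonal path encircles `center`."""
    cx, cy = center
    total = 0.0
    n = len(path)
    for k in range(n):
        x0, y0 = path[k]
        x1, y1 = path[(k + 1) % n]  # the last point connects back to the first
        step = math.atan2(y1 - cy, x1 - cx) - math.atan2(y0 - cy, x0 - cx)
        # Wrap each angular step into (-pi, pi] so crossing the branch cut is harmless.
        while step > math.pi:
            step -= 2 * math.pi
        while step <= -math.pi:
            step += 2 * math.pi
        total += step
    return round(total / (2 * math.pi))


def loop(radius_at, n=200, offset=(0.0, 0.0)):
    """Closed path whose radius at angle t is radius_at(t), centered on `offset`."""
    ox, oy = offset
    points = []
    for k in range(n):
        t = 2 * math.pi * k / n
        r = radius_at(t)
        points.append((ox + r * math.cos(t), oy + r * math.sin(t)))
    return points


circular = loop(lambda t: 1.0)
wiggly = loop(lambda t: 1.0 + 0.4 * math.sin(7 * t))
# "Pausing to take a break": linger at one point before completing the transit.
paused = wiggly[:50] + [wiggly[50]] * 10 + wiggly[50:]
# A loop that never goes around the other excitation at all.
beside = loop(lambda t: 1.0, offset=(3.0, 0.0))

results = [winding_number(p) for p in (circular, wiggly, paused, beside)]
```

The circular, wiggly, and paused paths all give the same count, while the loop that misses the center gives zero: the result depends on whether the path goes around, not on how.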

Said Nayak, “When you have a bunch of these excitations, it turns out that there isn’t a unique ground state of the system with those excitations present.” Rather there exists a rich manifold of states determined by the topology of the system and the excitations. Information can be stored and manipulated in this manifold of states. In the system that is most exciting experimentally, what most closely corresponds to a qubit, said Nayak, “is the presence or absence of a neutral fermionic excitation associated with a pair of electrically charged excitations.”

“When the charged excitations are far apart, the fermion isn’t really localized anywhere, so no local measurement you could do, or that the environment can do, is going to be able to tell whether it is there or not, and that’s essentially where the protection is coming from. When these two excitations are far apart, the information is delocalized over the whole system. That is a miraculous thing; not only is it very beautiful mathematically and conceptually, but it may also be useful for something,” said Nayak.

The charged excitations and the delocalized neutral fermion are examples of “quasiparticles” in the fractional quantum Hall effect. As a result of the neutral fermions, the charged quasiparticles are non-abelian anyons. According to Nayak, “Many of us believe that non-abelian anyons of this type exist in the 5/2 fractional quantum Hall state,” discovered in 1987 by some of the drop-in experimentalists attending the program: Robert Willett of Lucent, Jim Eisenstein (now at Caltech), and Horst Stormer (now at Columbia), together with their collaborators.

KITP Gives Birth to ‘Anyon’

Nayak did his PhD at Princeton University under Frank Wilczek, a particle theorist then at the Institute for Advanced Study in Princeton and now at MIT. Wilczek, who shared the 2004 Nobel Prize with his Princeton University thesis advisor, KITP director David Gross, served from 1980 to 1988 as the first permanent member at the KITP, where he first dabbled in condensed matter theory. In 1982 Wilczek coined the term “anyon” to describe quasiparticles in two-dimensional systems whose quantum states range continuously between fermionic and bosonic.

Nayak’s 1996 thesis research with Wilczek focused on understanding the behavior of possible non-abelian anyon excitations in the 5/2 state. They didn’t know it, but what Nayak and Wilczek were uncovering is, in today’s language, the qubit structure for topological quantum computing.

It turns out that the 12/5 state is an even better place to do quantum computing than 5/2, but it is also a more delicate state.

A specific scheme for how to do quantum computation in the 5/2 fractional quantum Hall state came late in 2004 in a paper Nayak co-authored with Freedman and a condensed matter theorist who operates close to experiment, Sankar Das Sarma of the University of Maryland, also a program participant. Their architecture is fairly concrete, and serious experimental efforts are underway to realize it.

In intermittent attendance at the three-month-long program, and in more sustained fashion at its concluding five-day conference (May 15 to 19), are condensed matter experimentalists, including representatives of the four groups who are now engaged in friendly competition to make that device out of gallium arsenide. Another program drop-in, Loren Pfeiffer, heads the Lucent facility for making gallium arsenide devices of the quality required by the experiments.

With developments in topological quantum computing representing the now rapid convergence of many types of theoretical and experimental expertise, one of the key features of the KITP program “Topological Phases and Quantum Computation” has been the pedagogical Thursday afternoon sessions, designed to facilitate an expert in one field learning from a lecture by an expert in another field, in a relaxed atmosphere that encourages attendees to ask the kind of “stupid” questions they might be wary of venturing in a more formal seminar setting.

To get up to speed on the subject, Nayak said, “You can’t learn about it in a book. The only way for somebody on the outside of this game to become an insider is to talk a lot to the right people, and they are [mostly] here.”